Linguistic Architecture for AI: How to Write Content Machines Understand and People Trust

The Shift We Can No Longer Ignore

By Meritxell G. Farré

Freelance Digital Marketing Strategist | SEO Content Specialist | Exploring the intersection of neuromarketing, SEO, and language psychology.

AI search hasn’t arrived with a big dramatic announcement. It has crept in quietly.

One day you search and see ten blue links; the next, you see an “AI overview” that summarises the answer for you. Tools like ChatGPT, Perplexity, Gemini and others are doing something similar in their own spaces, building answers by pulling from content that already exists.

Behind that simple answer box sits a new, uncomfortable but necessary question for brands:

Why is this AI quoting my competitors, and not me?

It’s easy to treat this as a purely technical problem. New tools, new panels, new features. But underneath that, something more fundamental is happening. AI systems are making judgements about which content feels clear, credible, and useful enough to represent a topic.

They don’t make those judgements emotionally, the way we do. They make them through patterns in language.

That means your visibility is no longer only about who links to you or how fast your site loads. It is also about how legible your words are to two audiences at the same time:

the human brain, and

the AI model trained to approximate it.

In other words, if your content is hard to interpret linguistically, both audiences will quietly move on.

This is where linguistic architecture becomes a strategic advantage. It is the structure that helps people understand you and helps machines quote you.

Before we get into the framework, we need to be clear on what AI actually does with our words.

1. What AI Understands (and What It Doesn’t)

AI does not “understand” in the way a human does. It doesn’t have lived experience, intuition, or context from its own life. What it does have is an enormous amount of exposure to language.

When you ask a model a question, it:

looks at your words

predicts what a good answer should look like based on patterns it has seen

selects and recombines pieces of information that match those patterns

To do that effectively, it needs content that is:

clear about its topic and intent

structured in a way that is easy to follow

rich enough to show depth and authority

emotionally steady enough to look “safe” and reliable

When these signals are weak, the model has less confidence in using that text. The page may still be indexed, but it is less likely to be surfaced as a quoted source or as part of an answer.

Think of it this way:

Humans skim and decide “this feels clear / helpful / trustworthy”.

AI extracts patterns and decides “this looks like the kind of text humans usually find clear / helpful / trustworthy”.

In my article The Linguistic Brain: How Language Shapes Perception, Memory, and Trust, I explored how our brains emotionally respond to language before we consciously analyse it. That emotional response heavily influences whether we keep reading.

Now we’re layering something new on top of that: the structural side of language. The way we build sentences, paragraphs, and arguments shapes how both humans and machines classify and value our content.

That structural side is what I call linguistic architecture.

2. What Is Linguistic Architecture?

Linguistic architecture is the intentional design of your language so that:

It flows naturally for the reader, and

It is structurally clear for AI-driven systems.

It’s not something separate from your “voice” or style. It’s the skeleton beneath it: the blueprint for how ideas are introduced, developed, and reinforced.

It includes:

Intent clarity: Is it obvious what this page or article is trying to do?

Information scaffolding: Is there a logical path the reader can follow?

Semantic density: Is there enough detail to show you genuinely know the topic?

Emotional coherence: Does the tone feel stable, human, and aligned with the reader’s state?

Predictable patterns: Does the structure repeat in ways that build comfort and understanding?

When these elements work together, your content does three things:

It makes the reader feel oriented and respected.

It gives AI systems strong signals about your topic, expertise, and intent.

It becomes easier to quote, summarise, and rank because it “behaves” like reliable content.

In The Missing Link Between SEO and Emotion, I showed how semantics and emotion lift content beyond mechanical optimisation. Linguistic architecture is the next step: it turns that insight into a repeatable structure.

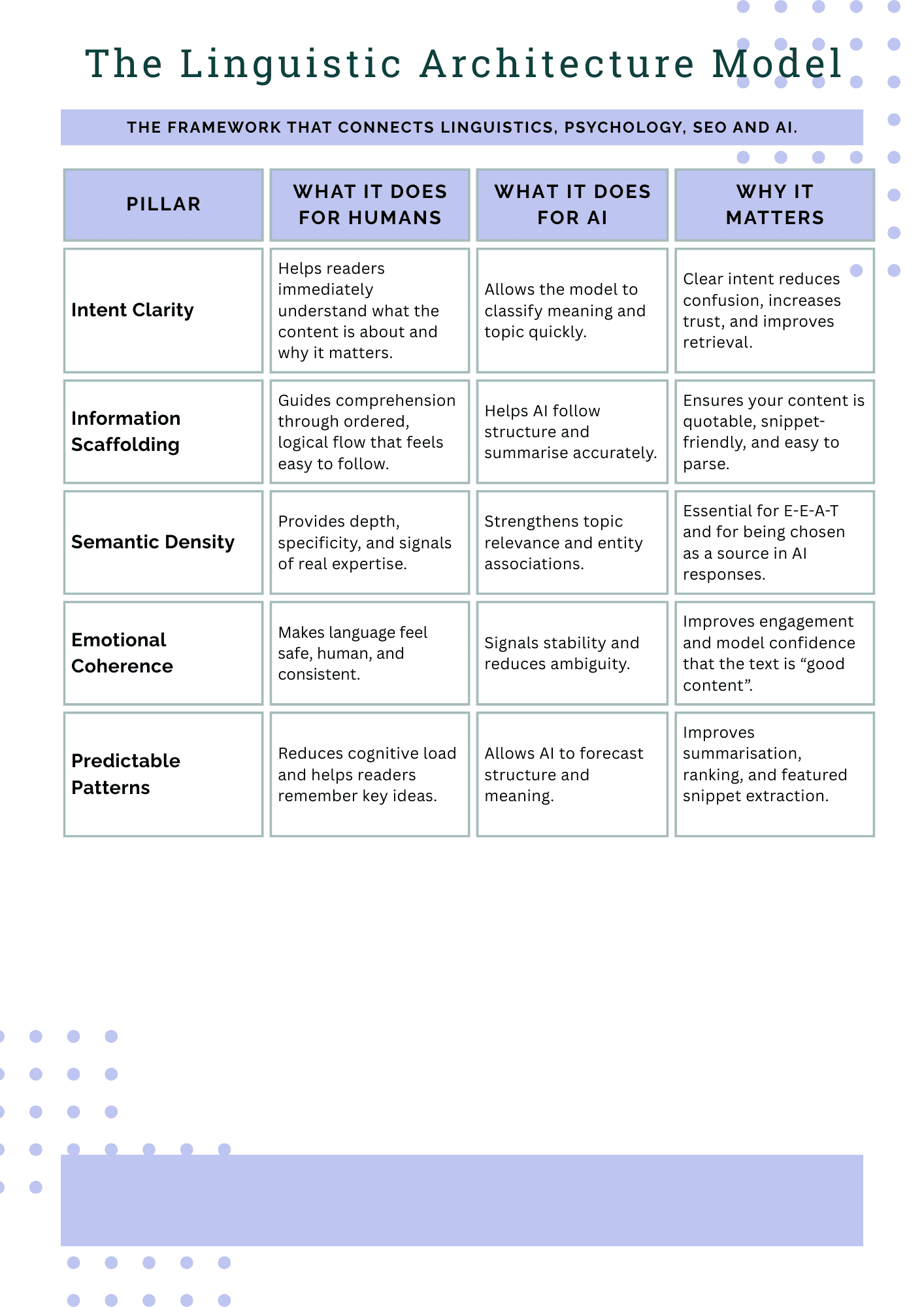

3. The Five Pillars of Linguistic Architecture

Here is the framework that connects linguistics, psychology, SEO, and AI.

Pillar 1: Intent Clarity

When someone lands on your content, they are already asking a few silent questions:

Where am I?

What is this about?

Is this relevant to my problem?

If those questions aren’t answered quickly, the reader leaves. That might show up as a “high bounce rate” in analytics, but at a human level, it is simply cognitive self-protection. The brain avoids spending energy on something that feels vague or irrelevant.

AI models, in their own way, behave similarly. They try to identify:

the topic

the purpose (guide, opinion, definition, comparison, etc.)

the audience

the level of depth

They do this through linguistic cues. Clear headlines, opening paragraphs that state the purpose, strong verbs (“this guide explains…”, “this article examines…”), and focused vocabulary all help.

Before:

“Our solution helps teams streamline processes and improve outcomes.”

This sentence sounds like marketing, not meaning. It has generic verbs (“helps”), generic nouns (“processes”, “outcomes”), and no hint of what the content will actually do for the reader.

After:

“This guide explains how to build a workflow that reduces manual work and unlocks measurable gains in team performance.”

Here, we know:

What the content is (a guide)

What it will help with (building a workflow)

What problem it addresses (manual work)

What outcome it aims for (measurable gains in performance)

For humans, this sets a clear expectation and respects their time.

For AI, it offers strong signals for classification and ranking.

That is intent clarity in action.

Pillar 2: Information Scaffolding

Information scaffolding is about the path you build through your content. It answers the question:

In what order should the reader encounter these ideas so that each step feels natural?

When this scaffolding is weak, the reader feels lost. You might notice this when you read something and think, “Wait, how did we get here?”

AI runs into the same problem. It uses headings, paragraph shape, transitions, and discourse markers (“first”, “however”, “in contrast”, “for example”) to understand how each part of your content relates to the others.

Imagine a page that jumps like this:

problem

pricing

one feature

a testimonial

another problem

half a solution

It might all be “on topic”, but it doesn’t feel cohesive.

Compare that to a pattern like:

Problem

Why it matters

What people usually try

Where those attempts fall short

A clearer alternative

Evidence or example

Humans recognise this as a narrative arc. It reduces effort because each paragraph prepares the mind for the next one. AI recognises it as a coherent structure that is easy to summarise and to pull snippets from.

Information scaffolding is the difference between a page that is technically correct and a page that is emotionally readable.

Pillar 3: Semantic Density

Semantic density is about how much meaningful information you pack into your language without overloading it.

High semantic density doesn’t mean long sentences or jargon. It means:

specific nouns instead of vague abstractions

measurable claims instead of empty promises

clear context instead of generic benefits

Before:

“Our platform is ideal for teams that want to improve productivity.”

This tells us almost nothing. What kind of teams? What kind of productivity? By how much? Through what mechanism?

After:

“Marketing teams use our platform to automate approvals, reduce manual steps, and regain an average of 6 to 10 hours per week.”

Here, we suddenly have:

a clear audience (marketing teams)

a mechanism (automate approvals, reduce manual steps)

an outcome (6 to 10 hours per week regained)

For humans, this makes the message tangible. They can visualise their week changing. The claim feels grounded and verifiable, which directly feeds trust.

For AI, the richer version includes more entities (“marketing teams”, “approvals”), clearer relationships (what the platform does), and a quantifiable effect. This strengthens the semantic network around your brand and your topic, which improves retrievability and perceived expertise.

Semantic density is what separates “fluff” from content that feels authoritative.

Pillar 4: Emotional Coherence

Emotional coherence is the sense that the language feels stable, respectful, and aligned with how the reader feels as they approach the content.

If someone is anxious about a problem, and the content feels cold or aggressively sales-focused, there is a mismatch. The brain interprets that mismatch as a kind of risk.

When tone is coherent:

Vocabulary feels appropriate to the context

Rhythm and length of sentences feel steady

The content doesn’t suddenly shift from supportive to pushy

Reassurance and clarity show up exactly where doubt would appear

For example:

“Here’s how to reduce risk without adding complexity.”

This sentence acknowledges the reader’s concern (risk), introduces a positive intention (reduce), and pre-empts a classic objection (complexity). It is emotionally coherent with a reader who worries about trade-offs.

Now compare:

“Our risk-reduction parameters are designed to optimise operational efficiency.”

This sounds like internal documentation, not a message to a real person. It uses abstract nouns and avoids emotional reality. The information might be similar, but the emotional signal is weaker.

AI doesn’t “feel” these emotions, but it does pick up on human patterns. Content that people stay with, share, or quote often has this kind of emotional coherence. Over time, that behaviour trains the models to recognise it as quality.

So when you write with emotional coherence, you’re not just helping people. You’re leaving clearer footprints for AI as well.

Pillar 5: Predictable Patterns

Predictable patterns might sound boring, but psychologically, they are powerful.

Humans like surprise in ideas, not in structure. When the way content is organised keeps changing, the brain burns energy simply trying to orient itself. That energy is then not available for understanding or remembering.

Predictable patterns can show up in:

using similar sentence shapes when making parallel points

keeping paragraphs roughly similar in length for a section

using comparable phrasing to introduce examples or summaries

maintaining a consistent order when presenting comparisons

AI models rely on these patterns too. They segment your content into meaningful units, then learn how those units relate. When patterns are stable, they can reliably identify “this is where the definition is”, “this is where the example is”, “this is where the conclusion is”.

That makes your content easier to summarise, to quote, and to place into features like snippets or AI overviews.

Predictability in structure doesn’t limit creativity; it frees it. Once the reader (and the model) trusts the structure, they can focus fully on the content.

4. Practical Transformations: Before & After (Explained)

Let’s look at a few concrete transformations and unpack why the “after” versions are stronger for both humans and AI.

Example 1: Opening Paragraph

Before:

“AI search is changing and brands need to adjust their strategy. There are many ways to appear in these new systems and businesses should follow best practices to get visibility online.”

This opening is vague. We don’t know:

How AI search is changing

What type of brand we are talking about

What the article will actually do for us

The language (“many ways”, “best practices”) signals generic advice. For a reader, this doesn’t create urgency or relevance. For AI, the lack of specificity makes it harder to classify.

After:

“AI search now evaluates content by how clearly it communicates intent, structure, and authority. This article breaks down the linguistic patterns that help brands appear in AI-generated responses and improve search visibility.”

Why is this better?

It names what is being evaluated (intent, structure, authority).

It states explicitly what the article will deliver (patterns that help brands appear in AI-generated responses).

It tells us why it matters (improve search visibility).

For a human, this feels precise and purposeful. They know what they’re going to get.

For AI, this provides strong topical signals: “AI search”, “content”, “linguistic patterns”, “search visibility”.

That combination of clarity and specificity is the core of linguistic architecture.

Example 2: Feature Description

Before:

“Our platform provides real-time insights that help teams work more efficiently.”

Again, this could be almost any tool. There’s nothing for the reader to hold onto. “Insights” and “work more efficiently” are generic promises. Emotionally, it doesn’t connect. Cognitively, it doesn’t inform.

After:

“The platform analyses approvals, bottlenecks, and recurring tasks to give teams real-time insight into where work slows down and how to recover up to 10 hours per week.”

Why is this stronger?

It names specific things the platform analyses (approvals, bottlenecks, recurring tasks).

It explains what the insight is about (where work slows down).

It quantifies the result (up to 10 hours per week).

For the reader, this creates a clear mental picture. They can imagine their own approvals and bottlenecks. The 10 hours make the promise concrete instead of abstract.

For AI, this version is full of meaningful signals: entities (approvals, tasks), relationships (analyses → insight → time recovered), and measurable outcome. That density helps the model understand that this is serious, useful content, not empty marketing language.

This is what I mean by specific, measurable, and emotionally coherent. The sentence respects the reader’s intelligence, and that respect is exactly what AI learns to associate with quality.

Example 3: CTA Language

Before:

“Learn more about our solution.”

This is a vague request. It doesn’t say why the reader should invest more attention, what they will see, or how much effort it requires.

After:

“See a three-minute walkthrough that shows how teams cut 6 to 10 hours of manual work each week.”

Here, several things work together:

“See a three-minute walkthrough” reduces perceived effort and time. The brain knows what it’s committing to.

“shows how” signals explanation, not pressure.

“teams cut 6 to 10 hours of manual work each week” ties the action to a specific, emotionally appealing outcome: less grind, more time.

For a human, this CTA lowers friction and increases motivation. It respects their time and makes the benefit vivid.

For AI, this sentence is rich in intent (“walkthrough”), structure (cause → effect), and measurable benefit. It looks like something people would actually click on, which again reinforces AI’s sense that this is content worth surfacing.

5. How This Shapes SEO in 2026

All of this brings us back to SEO.

Traditional SEO is not disappearing, but it’s being reframed. Keywords, backlinks, site speed, and technical hygiene remain essential. However, linguistic architecture is becoming the layer that determines who benefits most from those foundations.

Here’s how:

AI tools depend on semantic interpretation

If your content is hard to classify, it becomes background noise.

Strong linguistic architecture gives AI a clear map of your intent, your structure, and your expertise, which makes it easier for the model to include you in answers.

E-E-A-T is increasingly expressed through language

Experience, Expertise, Authoritativeness, and Trustworthiness are not just declared; they are demonstrated in how you write:

detailed stories or cases

specific results

clear authorship

confident but grounded tone

These are all linguistic choices, and AI models are learning to spot them.

Zero-click experiences favour clarity

As Google and other platforms provide more answers directly in search or summaries, they lean heavily on content that is easy to quote and condense.

If your content is:

scattered

vague

emotionally inconsistent

it is much harder to use as a source.

Human engagement trains the machine

Dwell time, scroll depth, task completion, and sharing patterns teach the algorithm what “good content” looks like. Linguistic architecture improves those metrics because it makes reading easier, richer, and more satisfying for actual humans.

The future of SEO sits where meaning, structure, and trust overlap. Linguistic architecture is the connective tissue.

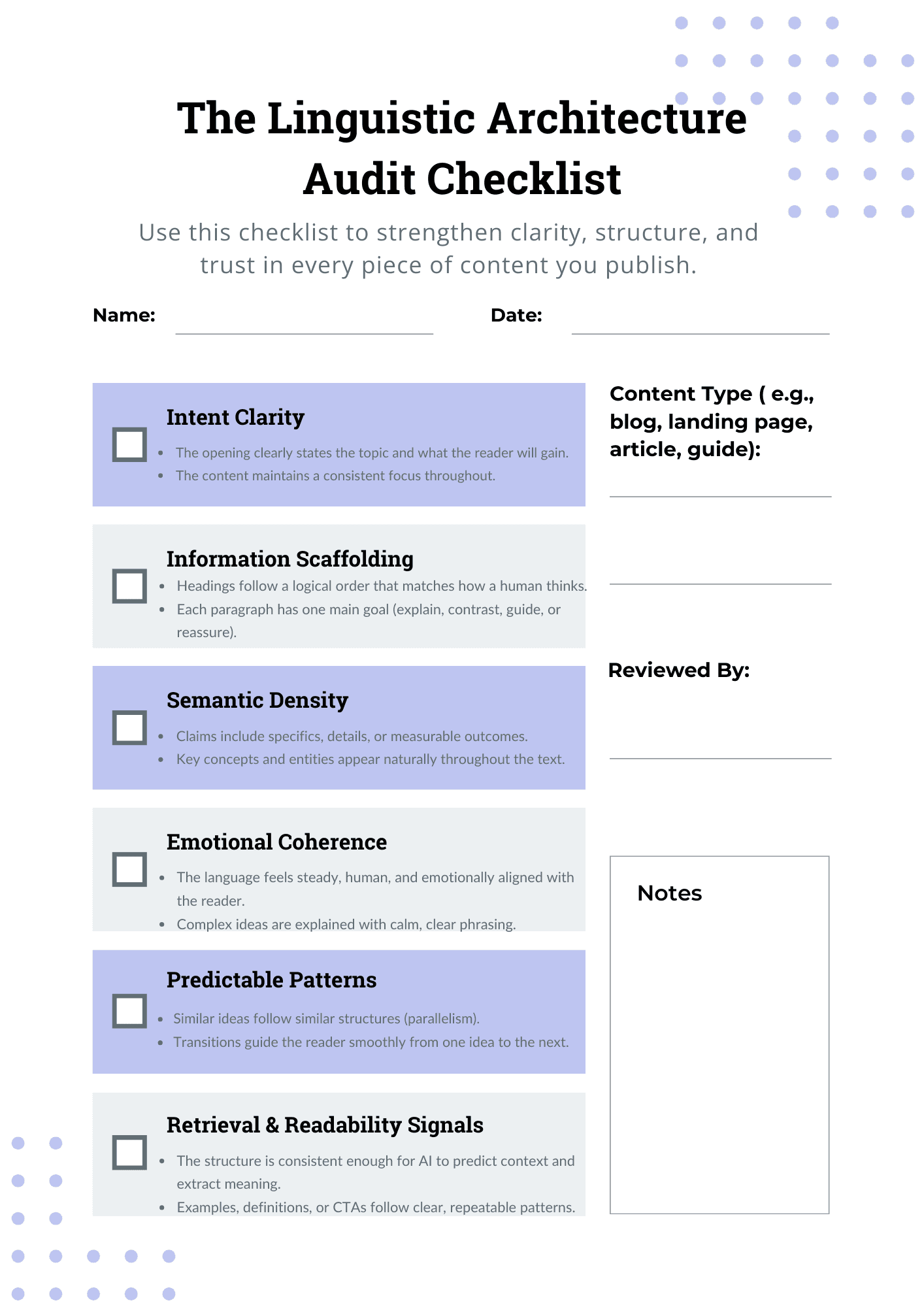

6. The Linguistic Architecture Audit (Checklist)

To turn this from theory into practice, here is a simple audit you can run on your next article or landing page.

The more “yes” answers you can give here, the stronger your linguistic architecture is.

7. Closing Reflection: The Structure Behind Trust

At a surface level, SEO looks like a technical discipline. At a deeper level, it is about communication: how clearly you can express value so that people recognise it and technology can reflect it.

Linguistic architecture lives at that deeper level. It is the structure behind trust.

When you design your language with both humans and AI in mind, you are not writing for machines. You are writing for humans in a way that machines can accurately recognise and reward.

I’m convinced that the brands that will stand out in the next few years are the ones that treat language as more than decoration. They will see it as infrastructure, carrying meaning, emotion, and authority into every interaction.

And that work begins, very simply, with the next sentence you write.